Data for Physical AI

Partnering with leading Physical AI labs and companies for custom data needs


The only data partner you need

Purpose-built for Physical AI

We specialise in building data for robotic and world foundation models

Control and Visibility

We are building processes that give you as much observability and control as possible over how your data is collected.

Customisation

Any collection strategy, any annotation type: we have it covered.

Data customised for your needs

Teleoperation in the real world and Isaac Sim

High-quality trajectory data for multiple hardware form factors (UR10, Franka, dexterous arms) and multiple input devices (SpaceMouse, Meta Quest, SO-ARM101), collected by human operators performing complex manipulation tasks. For real-world data, we are also experimenting with Real2Sim pipelines. Human-collected trajectories are further augmented using Cosmos.

Human Ego-centric and UMI data

We are scaling out ego-centric locomanipulation data collection across home service units and grocery stores. We are also training operators to record failure-case demonstrations, a key type of sample that is underrepresented in today's datasets.

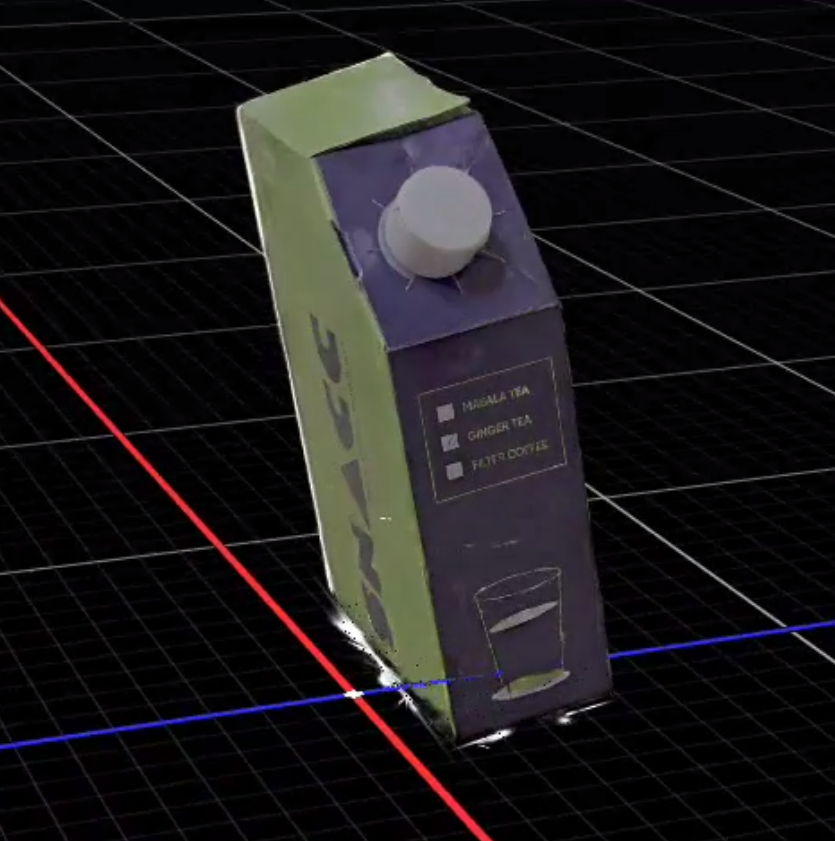

Photorealistic 3D Assets

Using only RGB frames from an iPhone camera, we reconstruct highly photorealistic 3D assets for use in robotic simulation and for training vision transformers. Once created, each asset is cleaned and verified by our human artists.

Meet the team